These are the logs from the relay menhera1b (0D1799FDE49AB2498362D9B2D2542309F6E99E30):

Sep 02 18:09:34.000 [notice] Tor 0.4.8.12 opening new log file.

Sep 02 18:09:34.370 [notice] We compiled with OpenSSL 30000020: OpenSSL 3.0.2 15 Mar 2022 and we are running with OpenSSL 30000020: 3.0.2. These two versions should be binary compatible.

Sep 02 18:09:34.371 [notice] Tor 0.4.8.12 running on Linux with Libevent 2.1.12-stable, OpenSSL 3.0.2, Zlib 1.2.11, Liblzma 5.2.5, Libzstd 1.4.8 and Glibc 2.35 as libc.

Sep 02 18:09:34.371 [notice] Tor can't help you if you use it wrong! Learn how to be safe at https://support.torproject.org/faq/staying-anonymous/

Sep 02 18:09:34.371 [notice] Read configuration file "/run/tor-instances/menhera1b.defaults".

Sep 02 18:09:34.371 [notice] Read configuration file "/etc/tor/instances/menhera1b/torrc".

Sep 02 18:09:34.372 [notice] Based on detected system memory, MaxMemInQueues is set to 2929 MB. You can override this by setting MaxMemInQueues by hand.

Sep 02 18:09:34.373 [notice] Opening OR listener on 0.0.0.0:9001

Sep 02 18:09:34.373 [notice] Opened OR listener connection (ready) on 0.0.0.0:9001

Sep 02 18:09:34.373 [notice] Opening OR listener on [::]:9001

Sep 02 18:09:34.373 [notice] Opened OR listener connection (ready) on [::]:9001

Sep 02 18:09:34.000 [notice] Parsing GEOIP IPv4 file /usr/share/tor/geoip.

Sep 02 18:09:34.000 [notice] Parsing GEOIP IPv6 file /usr/share/tor/geoip6.

Sep 02 18:09:34.000 [notice] Configured to measure statistics. Look for the *-stats files that will first be written to the data directory in 24 hours from now.

Sep 02 18:09:34.000 [notice] You are running a new relay. Thanks for helping the Tor network! If you wish to know what will happen in the upcoming weeks regarding its usage, have a look at https://blog.torproject.org/lifecycle-of-a-new-relay

Sep 02 18:09:34.000 [notice] It looks like I need to generate and sign a new medium-term signing key, because I don't have one. To do that, I need to load (or create) the permanent master identity key. If the master identity key was not moved or encrypted with a passphrase, this will be done automatically and no further action is required. Otherwise, provide the necessary data using 'tor --keygen' to do it manually.

Sep 02 18:09:34.000 [notice] Your Tor server's identity key fingerprint is 'menhera1b 0D1799FDE49AB2498362D9B2D2542309F6E99E30'

Sep 02 18:09:34.000 [notice] Your Tor server's identity key ed25519 fingerprint is 'menhera1b HTx3lLRiCF9uFRqLxv5J1U1p1izSqC98wc2+hQuabTQ'

Sep 02 18:09:34.000 [notice] Bootstrapped 0% (starting): Starting

Sep 02 18:09:34.000 [notice] Starting with guard context "default"

Sep 02 18:09:34.000 [notice] Signaled readiness to systemd

Sep 02 18:09:35.000 [notice] Opening Control listener on /run/tor-instances/menhera1b/control

Sep 02 18:09:35.000 [notice] Opened Control listener connection (ready) on /run/tor-instances/menhera1b/control

Sep 02 18:09:35.000 [notice] Unable to find IPv4 address for ORPort 9001. You might want to specify IPv6Only to it or set an explicit address or set Address.

Sep 02 18:09:35.000 [notice] Bootstrapped 5% (conn): Connecting to a relay

Sep 02 18:09:35.000 [notice] Bootstrapped 10% (conn_done): Connected to a relay

Sep 02 18:09:36.000 [notice] Bootstrapped 14% (handshake): Handshaking with a relay

Sep 02 18:09:36.000 [notice] Bootstrapped 15% (handshake_done): Handshake with a relay done

Sep 02 18:09:36.000 [notice] Bootstrapped 20% (onehop_create): Establishing an encrypted directory connection

Sep 02 18:09:37.000 [notice] Bootstrapped 25% (requesting_status): Asking for networkstatus consensus

Sep 02 18:09:37.000 [notice] Bootstrapped 30% (loading_status): Loading networkstatus consensus

Sep 02 18:09:39.000 [notice] I learned some more directory information, but not enough to build a circuit: We have no usable consensus.

Sep 02 18:09:39.000 [notice] Bootstrapped 40% (loading_keys): Loading authority key certs

Sep 02 18:09:39.000 [notice] The current consensus has no exit nodes. Tor can only build internal paths, such as paths to onion services.

Sep 02 18:09:39.000 [notice] Bootstrapped 45% (requesting_descriptors): Asking for relay descriptors

Sep 02 18:09:39.000 [notice] I learned some more directory information, but not enough to build a circuit: We need more microdescriptors: we have 0/7561, and can only build 0% of likely paths. (We have 0% of guards bw, 0% of midpoint bw, and 0% of end bw (no exits in consensus, using mid) = 0% of path bw.)

Sep 02 18:09:40.000 [notice] We'd like to launch a circuit to handle a connection, but we already have 32 general-purpose client circuits pending. Waiting until some finish.

Sep 02 18:09:41.000 [notice] Bootstrapped 50% (loading_descriptors): Loading relay descriptors

Sep 02 18:09:41.000 [notice] The current consensus contains exit nodes. Tor can build exit and internal paths.

Sep 02 18:09:44.000 [notice] Bootstrapped 55% (loading_descriptors): Loading relay descriptors

Sep 02 18:09:45.000 [notice] Bootstrapped 60% (loading_descriptors): Loading relay descriptors

Sep 02 18:09:45.000 [notice] Bootstrapped 65% (loading_descriptors): Loading relay descriptors

Sep 02 18:09:46.000 [notice] Bootstrapped 70% (loading_descriptors): Loading relay descriptors

Sep 02 18:09:46.000 [notice] Bootstrapped 75% (enough_dirinfo): Loaded enough directory info to build circuits

Sep 02 18:09:46.000 [notice] Bootstrapped 80% (ap_conn): Connecting to a relay to build circuits

Sep 02 18:09:46.000 [notice] Bootstrapped 85% (ap_conn_done): Connected to a relay to build circuits

Sep 02 18:09:47.000 [notice] Bootstrapped 89% (ap_handshake): Finishing handshake with a relay to build circuits

Sep 02 18:09:47.000 [notice] Bootstrapped 90% (ap_handshake_done): Handshake finished with a relay to build circuits

Sep 02 18:09:47.000 [notice] Bootstrapped 95% (circuit_create): Establishing a Tor circuit

Sep 02 18:09:48.000 [notice] Bootstrapped 100% (done): Done

Sep 02 18:17:50.000 [notice] New control connection opened.

Sep 02 18:21:31.000 [notice] New control connection opened.

Sep 02 18:26:02.000 [notice] New control connection opened.

Sep 02 18:28:09.000 [notice] New control connection opened.

Sep 02 18:30:36.000 [notice] External address seen and suggested by a directory authority: 43.228.174.250

Sep 02 18:49:35.000 [warn] Your server has not managed to confirm reachability for its ORPort(s) at 43.228.174.250:9001 and [2001:df3:14c0:ff00::2]:9001. Relays do not publish descriptors until their ORPort and DirPort are reachable. Please check your firewalls, ports, address, /etc/hosts file, etc.

Sep 02 18:59:46.000 [notice] New control connection opened.

Sep 02 19:09:35.000 [warn] Your server has not managed to confirm reachability for its ORPort(s) at 43.228.174.250:9001 and [2001:df3:14c0:ff00::2]:9001. Relays do not publish descriptors until their ORPort and DirPort are reachable. Please check your firewalls, ports, address, /etc/hosts file, etc.

Sep 02 19:28:39.000 [notice] New control connection opened.

Sep 02 19:29:35.000 [warn] Your server has not managed to confirm reachability for its ORPort(s) at 43.228.174.250:9001 and [2001:df3:14c0:ff00::2]:9001. Relays do not publish descriptors until their ORPort and DirPort are reachable. Please check your firewalls, ports, address, /etc/hosts file, etc.

Sep 02 19:39:31.000 [notice] New control connection opened.

Sep 02 19:49:35.000 [warn] Your server has not managed to confirm reachability for its ORPort(s) at 43.228.174.250:9001 and [2001:df3:14c0:ff00::2]:9001. Relays do not publish descriptors until their ORPort and DirPort are reachable. Please check your firewalls, ports, address, /etc/hosts file, etc.

Sep 02 20:00:08.000 [notice] Self-testing indicates your ORPort 43.228.174.250:9001 is reachable from the outside. Excellent.

Sep 02 20:04:41.000 [notice] Now checking whether IPv6 ORPort [2001:df3:14c0:ff00::2]:9001 is reachable... (this may take up to 20 minutes -- look for log messages indicating success)

Sep 02 20:04:45.000 [notice] Self-testing indicates your ORPort [2001:df3:14c0:ff00::2]:9001 is reachable from the outside. Excellent. Publishing server descriptor.

Sep 02 20:05:03.000 [notice] Your network connection speed appears to have changed. Resetting timeout to 60000ms after 18 timeouts and 1000 buildtimes.

Sep 02 20:05:08.000 [notice] Performing bandwidth self-test...done.

Sep 02 20:17:33.000 [notice] New control connection opened.

Sep 02 22:32:36.000 [notice] No circuits are opened. Relaxed timeout for circuit 3300 (a Measuring circuit timeout 3-hop circuit in state doing handshakes with channel state open) to 60000ms. However, it appears the circuit has timed out anyway.

Sep 02 23:47:07.000 [notice] No circuits are opened. Relaxed timeout for circuit 4707 (a Measuring circuit timeout 3-hop circuit in state doing handshakes with channel state open) to 162380ms. However, it appears the circuit has timed out anyway.

Sep 03 00:00:27.000 [notice] Received reload signal (hup). Reloading config and resetting internal state.

Sep 03 00:00:27.000 [notice] Read configuration file "/run/tor-instances/menhera1b.defaults".

Sep 03 00:00:27.000 [notice] Read configuration file "/etc/tor/instances/menhera1b/torrc".

Sep 03 00:00:27.000 [notice] Tor 0.4.8.12 opening log file.

Sep 03 00:09:35.000 [notice] Heartbeat: Tor's uptime is 6:00 hours, with 7 circuits open. I've sent 194.13 MB and received 238.19 MB. I've received 1046 connections on IPv4 and 340 on IPv6. I've made 654 connections with IPv4 and 99 with IPv6.

Sep 03 00:09:35.000 [notice] While bootstrapping, fetched this many bytes: 582343 (consensus network-status fetch); 13358 (authority cert fetch); 6687244 (microdescriptor fetch)

Sep 03 00:09:35.000 [notice] While not bootstrapping, fetched this many bytes: 20714132 (server descriptor fetch); 1080 (server descriptor upload); 829595 (consensus network-status fetch); 19725 (authority cert fetch); 345328 (microdescriptor fetch)

Sep 03 00:09:35.000 [notice] Circuit handshake stats since last time: 0/0 TAP, 1294/1294 NTor.

Sep 03 00:09:35.000 [notice] Since startup we initiated 0 and received 0 v1 connections; initiated 0 and received 0 v2 connections; initiated 0 and received 0 v3 connections; initiated 0 and received 0 v4 connections; initiated 618 and received 1324 v5 connections.

Sep 03 00:09:35.000 [notice] Heartbeat: DoS mitigation since startup: 0 circuits killed with too many cells, 0 circuits rejected, 0 marked addresses, 0 marked addresses for max queue, 0 same address concurrent connections rejected, 0 connections rejected, 0 single hop clients refused, 0 INTRODUCE2 rejected.

Sep 03 01:53:35.000 [notice] No circuits are opened. Relaxed timeout for circuit 6456 (a Measuring circuit timeout 3-hop circuit in state doing handshakes with channel state open) to 60000ms. However, it appears the circuit has timed out anyway. [12 similar message(s) suppressed in last 7500 seconds]

Sep 03 01:55:53.000 [notice] Guard Liberation ($BBEAFF24A9D3406DCC95484DEB3B7DDF98A99980) is failing more circuits than usual. Most likely this means the Tor network is overloaded. Success counts are 118/170. Use counts are 0/0. 118 circuits completed, 0 were unusable, 0 collapsed, and 65 timed out. For reference, your timeout cutoff is 60 seconds.

Sep 03 06:09:35.000 [notice] Heartbeat: Tor's uptime is 12:00 hours, with 9 circuits open. I've sent 261.75 MB and received 302.29 MB. I've received 3562 connections on IPv4 and 1110 on IPv6. I've made 1258 connections with IPv4 and 243 with IPv6.

Sep 03 06:09:35.000 [notice] While bootstrapping, fetched this many bytes: 582343 (consensus network-status fetch); 13358 (authority cert fetch); 6687244 (microdescriptor fetch)

Sep 03 06:09:35.000 [notice] While not bootstrapping, fetched this many bytes: 25681944 (server descriptor fetch); 1080 (server descriptor upload); 1128513 (consensus network-status fetch); 43060 (authority cert fetch); 421776 (microdescriptor fetch)

Sep 03 06:09:35.000 [notice] Circuit handshake stats since last time: 0/0 TAP, 3607/3607 NTor.

Sep 03 06:09:35.000 [notice] Since startup we initiated 0 and received 0 v1 connections; initiated 0 and received 0 v2 connections; initiated 0 and received 0 v3 connections; initiated 0 and received 0 v4 connections; initiated 1216 and received 4561 v5 connections.

Sep 03 06:09:35.000 [notice] Heartbeat: DoS mitigation since startup: 0 circuits killed with too many cells, 0 circuits rejected, 0 marked addresses, 0 marked addresses for max queue, 0 same address concurrent connections rejected, 0 connections rejected, 0 single hop clients refused, 0 INTRODUCE2 rejected.

Sep 03 07:20:45.000 [notice] Performing bandwidth self-test...done.

Sep 03 12:09:35.000 [notice] Heartbeat: Tor's uptime is 18:00 hours, with 14 circuits open. I've sent 372.08 MB and received 409.18 MB. I've received 5940 connections on IPv4 and 1826 on IPv6. I've made 3093 connections with IPv4 and 727 with IPv6.

Sep 03 12:09:35.000 [notice] While bootstrapping, fetched this many bytes: 582343 (consensus network-status fetch); 13358 (authority cert fetch); 6687244 (microdescriptor fetch)

Sep 03 12:09:35.000 [notice] While not bootstrapping, fetched this many bytes: 30053010 (server descriptor fetch); 1080 (server descriptor upload); 1388338 (consensus network-status fetch); 62805 (authority cert fetch); 481287 (microdescriptor fetch)

Sep 03 12:09:35.000 [notice] Circuit handshake stats since last time: 0/0 TAP, 3536/3536 NTor.

Sep 03 12:09:35.000 [notice] Since startup we initiated 0 and received 0 v1 connections; initiated 0 and received 0 v2 connections; initiated 0 and received 0 v3 connections; initiated 0 and received 0 v4 connections; initiated 3354 and received 7603 v5 connections.

Sep 03 12:09:35.000 [notice] Heartbeat: DoS mitigation since startup: 0 circuits killed with too many cells, 0 circuits rejected, 0 marked addresses, 0 marked addresses for max queue, 0 same address concurrent connections rejected, 0 connections rejected, 0 single hop clients refused, 0 INTRODUCE2 rejected.

Sep 03 13:03:44.000 [notice] No circuits are opened. Relaxed timeout for circuit 15968 (a Measuring circuit timeout 3-hop circuit in state doing handshakes with channel state open) to 60000ms. However, it appears the circuit has timed out anyway. [16 similar message(s) suppressed in last 40140 seconds]

Sep 03 14:43:58.000 [notice] No circuits are opened. Relaxed timeout for circuit 17277 (a Measuring circuit timeout 3-hop circuit in state doing handshakes with channel state open) to 60000ms. However, it appears the circuit has timed out anyway.

Sep 03 15:18:49.000 [notice] New control connection opened.

Sep 03 16:04:03.000 [notice] New control connection opened.

Sep 03 17:14:50.000 [notice] No circuits are opened. Relaxed timeout for circuit 19242 (a Measuring circuit timeout 3-hop circuit in state doing handshakes with channel state open) to 60000ms. However, it appears the circuit has timed out anyway.

Sep 03 18:09:35.000 [notice] Heartbeat: Tor's uptime is 1 day 0:00 hours, with 11 circuits open. I've sent 529.55 MB and received 563.99 MB. I've received 8283 connections on IPv4 and 2559 on IPv6. I've made 5105 connections with IPv4 and 1308 with IPv6.

Sep 03 18:09:35.000 [notice] While bootstrapping, fetched this many bytes: 582343 (consensus network-status fetch); 13358 (authority cert fetch); 6687244 (microdescriptor fetch)

Sep 03 18:09:35.000 [notice] While not bootstrapping, fetched this many bytes: 34494629 (server descriptor fetch); 1620 (server descriptor upload); 1720902 (consensus network-status fetch); 82514 (authority cert fetch); 683869 (microdescriptor fetch)

Sep 03 18:09:35.000 [notice] Circuit handshake stats since last time: 0/0 TAP, 3565/3565 NTor.

Sep 03 18:09:35.000 [notice] Since startup we initiated 0 and received 0 v1 connections; initiated 0 and received 0 v2 connections; initiated 0 and received 0 v3 connections; initiated 0 and received 0 v4 connections; initiated 5765 and received 10626 v5 connections.

Sep 03 18:09:35.000 [notice] Heartbeat: DoS mitigation since startup: 0 circuits killed with too many cells, 0 circuits rejected, 0 marked addresses, 0 marked addresses for max queue, 0 same address concurrent connections rejected, 0 connections rejected, 0 single hop clients refused, 0 INTRODUCE2 rejected.

Sep 03 18:23:33.000 [notice] No circuits are opened. Relaxed timeout for circuit 20143 (a Measuring circuit timeout 3-hop circuit in state doing handshakes with channel state open) to 60000ms. However, it appears the circuit has timed out anyway.

Sep 03 19:18:49.000 [notice] Performing bandwidth self-test...done.

Sep 03 20:13:42.000 [notice] Our directory information is no longer up-to-date enough to build circuits: We're missing descriptors for 1/3 of our primary entry guards (total microdescriptors: 7700/7720). That's ok. We will try to fetch missing descriptors soon.

Sep 03 20:13:42.000 [notice] I learned some more directory information, but not enough to build a circuit: We're missing descriptors for 1/3 of our primary entry guards (total microdescriptors: 7700/7720). That's ok. We will try to fetch missing descriptors soon.

Sep 03 20:13:43.000 [notice] We now have enough directory information to build circuits.

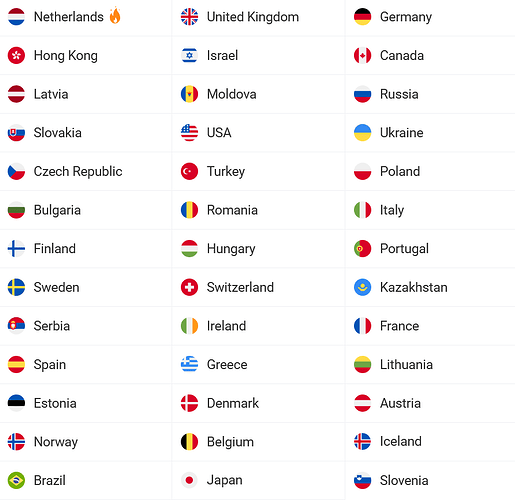

The OONI result is: Tor censorship test result in Japan

When I fetch https://proof.ovh.net/files/100Mb.dat through Tor, using this newer relay of ours as the ‘bridge’ from the same network, the throughput is consistently around 2000 kBytes/s across multiple runs.
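To compare that figure with link speeds quoted in Mbit/s, the conversion can be sketched as below. The helper name `kbytes_per_s_to_mbit_per_s` is hypothetical. Whether "kBytes" here means 1000 or 1024 bytes depends on the download tool and is an assumption either way, so both are shown.

```python
def kbytes_per_s_to_mbit_per_s(kbytes_per_s, kilo=1000):
    """Convert kBytes/s to Mbit/s (1 Mbit = 1,000,000 bits).

    `kilo` is 1000 for decimal kB or 1024 for KiB; which one the
    measuring tool reports is an assumption.
    """
    return kbytes_per_s * kilo * 8 / 1_000_000


print(kbytes_per_s_to_mbit_per_s(2000))        # 16.0
print(kbytes_per_s_to_mbit_per_s(2000, 1024))  # 16.384
```

So the measured throughput is roughly 16 Mbit/s under either reading.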