On Saturday, March 22nd, 2025 at 11:52 AM, boldsuck via tor-relays <tor-relays@lists.torproject.org> wrote:

On Tuesday, 18 March 2025 18:31 Tor at 1AEO via tor-relays wrote:

> Listed general information below. What other information is helpful?

>

> Didn't want to log but seems will need something to troubleshoot issues.

> Will work on metricsport, prometheus and grafana. From a quick glance

> through htop sorting by memory and restarting the 7 relays at the top, ~4GB

> of RAM frees up (3 in RAM and 1 in Swap) per relay.

>

> 66 Tor relays (all middle/guard). All less than 40 days old.

I have 2x10G:

80 instances (40 guards/40 bridges) using 130G RAM

Welcome to the Internet, I suspect DDoS. Do you have ip/nftables?

With routed IPs I have no conntrack / no table filter

Systemli Paste

On other 1G servers I have dynamic NFT rules.

Search for information about YOUR 10G network card driver. e.g:

pre-up /sbin/ethtool commands may be required.

> General Setup:

> EPYC 7702P.

>

> 256GB RAM

>

> Software Versions:

> Tor version 0.4.8.14

> Ubuntu OS 24.04.2

>

> Tor configuration:

> SOCKSPort 0

SocksPolicy reject *

^

# I'm paranoid and I don't need ControlPort

ControlPort 0

> ControlPort xxx.xxx.xxx.xxx:xxx

> HashedControlPassword xxx

>

> ORPort xxx.xxx.xxx.xxx:xxxxx

> Address xxx.xxx.xxx.xxx

> OutboundBindAddress xxx.xxx.xxx.xxx

RelayBandwidthRate 100 MBytes

RelayBandwidthBurst 200 MBytes

> Nickname ...

>

> ContactInfo ...

>

> MyFamily...

> ExitPolicy reject *:*

Only a hint if you have several dozen relays, you can use one file. eg:

## Include MyFamily & ContactInfo

%include /etc/tor/torrc.all

## Include Exit Policy

%include /etc/tor/torrc.exit

> On Tuesday, March 18th, 2025 at 3:44 AM, mail--- via tor-relays <tor-relays@lists.torproject.org> wrote:

>

> > Hi,

> >

> > To be honest I think something might be wrong. Maybe some memory leak or

> > another issue because 66 relays with such low bandwidth shouldn't even

> > come close to a memory footprint of 256 GB. We use different operating

> > systems and most likely also slightly different relay configurations, but

> > our relays use significantly less memory. You shouldn't need more than

> > 128 GB of memory for ~10 Gb/s of Tor traffic, although 256 GB is

> > recommended for some headroom for attacks and spikes and such.

> >

> > Could you share your general setup, software versions and Tor

> > configuration? Perhaps someone on this mailing list will be able to help

> > you. Also using node_exporter and MetricsPort (for example with a few

> > Grafana dashboards) would probably yield valuable information about this

> > excessive memory footprint. For example: if the memory footprint

> > increases linearly over time, it might be some software memory leak that

> > requires a fix.
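As a rough illustration of the "increases linearly over time" check above, here is a minimal Python sketch. It assumes you already have memory samples for one relay from whatever monitoring you use. The sample values below are invented for illustration and the function name `linear_fit` is hypothetical. A steady positive slope with a fit close to 1.0 would hint at a leak rather than normal ramp-up, but this is only a heuristic.

```python
def linear_fit(samples):
    """Least-squares fit for (hours, rss_gib) pairs.

    Returns (slope in GiB/hour, intercept in GiB, r_squared).
    """
    n = len(samples)
    xs = [x for x, _ in samples]
    ys = [y for _, y in samples]
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # r_squared near 1.0 means the growth is close to a straight line.
    r_squared = (sxy * sxy) / (sxx * syy) if syy else 1.0
    return slope, intercept, r_squared


# Made-up samples: (hours since restart, resident memory in GiB).
samples = [(0, 1.0), (6, 1.5), (12, 2.0), (18, 2.5), (24, 3.0)]

slope, intercept, r_squared = linear_fit(samples)
print(f"growth: {slope * 24:.2f} GiB/day, fit quality r^2 = {r_squared:.3f}")
```

Running the same fit per relay after a restart should show whether every relay grows the same way or only a few of them do.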

> >

> > Cheers,

> >

> > tornth

> >

> > Mar 18, 2025, 10:21 by tor-relays@lists.torproject.org:

> >

> > > Somewhat of a surprise based on the 2-4x RAM to core/threads/relay ratio

> > > in this email thread, ran out of 256GB RAM with 66 Tor relays (roughly

> > > ~4x ratio).

> > >

> > > Is something misconfigured, or is this expected as part of the relay

> > > ramp-up behavior, or just regular relay behavior?

> > >

> > > Summary: 64 cores / 128 threads (EPYC 7702P) running 66 Tor relays (all

> > > middle/guard), Tor version 0.4.8.14, used 256GB RAM and went to swap, on

> > > a 10 Gbps unmetered connection.

> > >

> > > Details:

> > >

> > > - ~30 relays are 30 days old and within 24 hours of adding ~30 new

> > > relays, used up 256GB RAM.

> > >

> > > - Average Advertised Bandwidth is ~3 MiB/s per 33 relays and the other 33

> > > are unmeasured / not listed advertised bandwidth yet.

> > >

> > > - Swap was set at default 8GB and maxed out. Changed to 256G temporarily.

> > > Swap usage is slowly climbing and reached 20G within first hour of

> > > increasing size.

> > >

> > > - Ubuntu 24.04.2 LTS default server install.

> > >

> > > - Nothing else running.

> > >

> > > - Will upgrade the RAM on this server to 768GB within the next few days.

> > >

> > > Have a different server, with 88 cores/threads and ~88 relays, all less

> > > than 30 days old, hovering around 240GB RAM, same average advertised

> > > bandwidth of ~3 MiB/s per half the relays, but have already upgraded RAM

> > > to 384GB and plan to take it to 512GB within the next week or two. Same

> > > Ubuntu and Tor versions and software configuration.

> > >

> > > Will keep sharing back as more relays and servers ramp up traffic.

> > >

> > > > On Tuesday, February 18th, 2025 at 11:23 PM, mail--- via tor-relays <tor-relays@lists.torproject.org> wrote:

> > > >

> > > > > Hi,

> > > > >

> > > > > Many people already replied, but here are my (late) two cents.

> > > > >

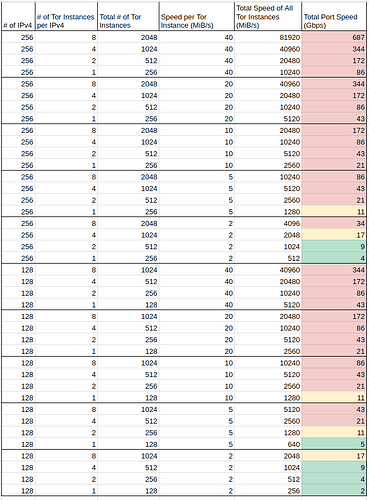

> > > > > > 1) If a full IPv4 /24 Class C was available to host Tor relays, what

> > > > > > are some optimal ways to allocate bandwidth, CPU cores and RAM to

> > > > > > > maximize utilization of the IPv4 /24 for Tor?

> > > > > > "Optimal" depends on your preferences and goals. Some examples:

> > > > >

> > > > > > - IP address efficiency: run 8 relays per IPv4 address.

> > > > > > - Use the best ports: 256 relays (443) or 512 relays (443+80).

> > > > > > - Lowest kernel/system congestion: 1 locked relay per core/SMT thread

> > > > > > combination, ideally on high clocked CPUs.

> > > > > > - Easiest to manage: as few relays as possible.

> > > > > > - Memory efficiency: only run middle relays on very high clocked CPUs

> > > > > > (4-5 GHz).

> > > > > > - Cost efficiency: run many relays on 1-2 generations old Epyc CPUs

> > > > > > with a high core count (64 or more).

> > > > >

> > > > > There are always constraints. The hardware/CPU/memory and

> > > > > bandwidth/routing capability available to you are probably not

> > > > > > infinite. Also, the Tor Project limits bandwidth contributions to a

> > > > > > maximum of 20% and 10% for exit and overall consensus weight respectively.

> > > > >

> > > > > With 256 IP addresses on modern hardware, it will be very hard to not

> > > > > > run into one of these limitations long before you can make it

> > > > > 'optimal'. Hardware wise, one modern/current gen high performance

> > > > > server only running exit relays will easily push enough Tor traffic to

> > > > > do more than half of the total exit bandwidth of the Tor network.

> > > > >

> > > > > My advice would be:

> > > > > 1) Get the fastest/best hardware with current-ish generation CPU IPC

> > > > > capabilities you can get within your budget. To lower complexity with

> > > > > keeping congestion in control, one socket is easier to deal with than

> > > > > a dual socket system.

> > > > >

> > > > > (tip for NIC: if your switch/router has 10 Gb/s or 25 Gb/s ports, get

> > > > > some of the older Mellanox cards. They are very stable (more so than

> > > > > their Intel counterparts in my experience) and extremely affordable

> > > > > nowadays because of all the organizations that throw away their

> > > > > digital sovereignty and privacy of their employees/users to move to

> > > > > the cloud).

> > > > >

> > > > > 3) Start with 1 Tor relay per physical core (ignoring SMT). When the

> > > > > Tor relays have ramped up (this takes 2-3 months for guard relays) and

> > > > > there still is considerable headroom on the CPU (Tor runs extremely

> > > > > poorly at scale sadly, so this would be my expectation) then move to 1

> > > > > Tor relay per thread (SMT included).

> > > > >

> > > > > (tip: already run/'train' some Tor relays with a very limited bandwidth

> > > > > (2 MB/s or something) parallel to your primary ones and pin them all

> > > > > to 1-2 cores to let them ramp up in parallel to your primary ones.

> > > > > This makes it much less cumbersome to scale up your Tor contribution

> > > > > when you need/want/can do that in the future).

> > > > >

> > > > > 4) Assume at least 1 GB of RAM per relay on modern CPUs + 32 GB

> > > > > additionally for OS, DNS, networking and to have some headroom for DoS

> > > > > attacks. This may sound high, especially considering the advice in the

> > > > > Tor documentation. But on modern CPUs (especially with a high

> > > > > clockspeed) guard relays can use a lot more than 512 MB of RAM,

> > > > > especially when they are getting attacked. Middle and exit relays

> > > > > require less RAM.

> > > > >

> > > > > Don't skimp out on system memory capacity. DDR4 RDIMMs with decent

> > > > > clockspeeds are so cheap nowadays. For reference: we ran our smaller

> > > > > > Tor servers (16C@3.4GHz) with 64 GB of RAM and had to upgrade it to

> > > > > 128 GB because during attacks RAM usage exceeded the amount available

> > > > > and killed processes.
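The sizing rule in point 4 (at least 1 GB per relay plus 32 GB for the system) is easy to apply to the relay counts mentioned in this thread. The following is a small Python sketch of that arithmetic only. The function name `estimated_ram_gb` is made up for illustration, and the rule is a lower bound, not a prediction of real usage.

```python
def estimated_ram_gb(relays, per_relay_gb=1, base_gb=32):
    """Minimum RAM per the rule of thumb: per-relay memory plus a fixed
    allowance for OS, DNS, networking and DoS headroom."""
    return relays * per_relay_gb + base_gb


# Relay counts taken from the two servers described in this thread.
for relays in (66, 88):
    print(f"{relays} relays -> at least {estimated_ram_gb(relays)} GB")

# Observed usage on the 66-relay server was the full 256 GB.
observed_per_relay = 256 / 66
print(f"observed: about {observed_per_relay:.1f} GB per relay")
```

By this rule the 66-relay server would need at least 98 GB, so exhausting 256 GB (roughly 3.9 GB per relay) is well above the baseline the rule assumes.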

> > > > >

> > > > > 5) If you have the IP space available, use one IPv4 address per relay

> > > > > and use all the good ports such as 443. If IP addresses are more

> > > > > scarce, it's also not bad to run 4 or 8 relays per IP address.

> > > > > Especially for middle and exit relays the port doesn't matter (much).

> > > > > Guard relays should ideally always run on a generally used (and

> > > > > > generally unblocked) port.

> > > > >

> > > > > > 2) If a full 10 Gbps connection was available for Tor relays, how many

> > > > > > CPU cores, RAM and IPv4 addresses would be required to saturate the

> > > > > > > 10 Gbps connection?

> > > > > > That greatly depends on the CPU and your configuration. I can offer 3

> > > > > > references based on real world examples. They all run a mix of

> > > > > > guard/middle/exit relays.

> > > > >

> > > > > > 1) Typical low core count (16+SMT) with higher clockspeed (3.4 GHz)

> > > > > > saturates a 10 Gb/s connection with ~18.5 physical cores + SMT.

> > > > > > 2) Typical higher core count (64+SMT) with lower clockspeed (2.25 GHz)

> > > > > > saturates a 10 Gb/s connection with ~31.5 physical cores + SMT.

> > > > > > 3) Typical energy efficient/low performance CPU with low core count (16)

> > > > > > with very low clockspeed (2.0 GHz) used often in networking appliances

> > > > > > saturates a 10 Gb/s connection with ~75 physical cores (note: no SMT).

> > > > >

> > > > > > The number of IP addresses required also depends on multiple factors.

> > > > > > But I'd say that you would need between one and two relays per

> > > > > > core/SMT thread mentioned above in order to saturate

> > > > > > 10 Gb/s. This would be 37-74, 63-126 and 75-150 relays respectively.

> > > > > So between 5 and 19 IPv4 addresses would be required at minimum,

> > > > > depending on CPU performance level.
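The relay and IPv4 figures above can be reproduced with a few lines of Python. This is a sketch of the arithmetic only, assuming one to two relays per core/SMT thread and at most 8 relays per IPv4 address, as stated earlier in the thread. The function names are made up for illustration.

```python
import math

MAX_RELAYS_PER_IPV4 = 8  # figure quoted earlier in this thread


def relay_range(physical_cores, smt=True):
    """Return (min, max) relay counts: one to two relays per hardware thread."""
    threads = math.ceil(physical_cores * (2 if smt else 1))
    return threads, 2 * threads


def min_ipv4_addresses(relays):
    """Smallest number of IPv4 addresses that can host this many relays."""
    return math.ceil(relays / MAX_RELAYS_PER_IPV4)


# (physical cores needed for 10 Gb/s, SMT available) from the three references.
references = [(18.5, True), (31.5, True), (75, False)]

for cores, smt in references:
    low, high = relay_range(cores, smt)
    print(f"{cores} cores, SMT={smt}: {low}-{high} relays, "
          f"{min_ipv4_addresses(low)}-{min_ipv4_addresses(high)} IPv4 addresses")
```

The smallest case (37 relays) needs 5 addresses and the largest (150 relays) needs 19, which matches the range given above.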

> > > > >

> > > > > > RAM wise the more relays you run, the more RAM overhead you will have.

> > > > > > So in general it's better to run fewer relays at a higher speed each

> > > > > > than to run many at a low clock speed. But since Tor scales so badly you

> > > > > > need more relays anyway, so optimizing this isn't easy in practice.

> > > > >

> > > > > > 3) Same for a 20 Gbps connection, how many CPU cores, RAM and IPv4

> > > > > > > addresses are required to saturate?

> > > > > > Double the amount compared to 10 Gb/s.

> > > > >

> > > > > Good luck with your Tor adventure. And let us know your findings with

> > > > > achieving 10 Gb/s when you get there :-).

> > > > >

> > > > > Cheers,

> > > > >

> > > > > tornth

> > > > >

> > > > > Feb 3, 2025, 18:14 by tor-relays@lists.torproject.org:

> > > > >

> > > > > > Hi All,

> > > > > >

> > > > > > Looking for guidance around running high performance Tor relays on

> > > > > > Ubuntu.

> > > > > >

> > > > > > Few questions:

> > > > > > 1) If a full IPv4 /24 Class C was available to host Tor relays, what

> > > > > > are some optimal ways to allocate bandwidth, CPU cores and RAM to

> > > > > > maximize utilization of the IPv4 /24 for Tor?

> > > > > >

> > > > > > 2) If a full 10 Gbps connection was available for Tor relays, how many

> > > > > > CPU cores, RAM and IPv4 addresses would be required to saturate the

> > > > > > 10 Gbps connection?

> > > > > >

> > > > > > 3) Same for a 20 Gbps connection, how many CPU cores, RAM and IPv4

> > > > > > addresses are required to saturate?

> > > > > >

> > > > > > Thanks!

> > > > > >

> > > > > > Sent with Proton Mail secure email.

--

╰_╯ Ciao Marco!

Debian GNU/Linux

It's free software and it gives you freedom!

_______________________________________________

tor-relays mailing list -- tor-relays@lists.torproject.org

To unsubscribe send an email to tor-relays-leave@lists.torproject.org