Hi,

I have a strange issue. I run around 19 relays right now and have done so for a while, but I have never seen this before.

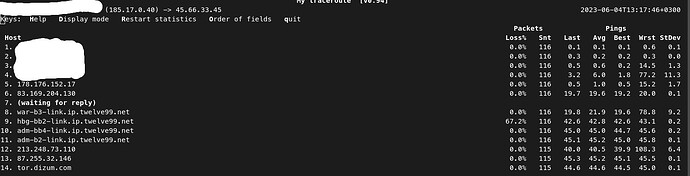

One of my non-exit relays has descriptor issues. According to Tor, it’s offline. I haven’t changed anything on the server itself, and my hoster (which has always been very helpful and transparent) considers the network to be OK, which it does seem to be.

I tested DNS, IPv4 and IPv6. Bandwidth (10 Gbit/s) is fully functional. The logs show nothing out of the ordinary, so it should be working!

Even getting a certificate from the ORPort works:

openssl s_client -connect 185.17.0.40:443
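
For anyone who wants to repeat the basic reachability check on both address families without `openssl`, here is a minimal Python sketch. The function names `tcp_reachable` and `check_orports` are made up for illustration, and it only tests that a TCP connection can be opened from wherever the script runs. It says nothing about whether the directory authorities can reach the relay.

```python
import socket


def tcp_reachable(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to (host, port) opens within timeout."""
    try:
        # create_connection accepts IPv4 and IPv6 literals as well as
        # hostnames, so one call covers both ORPort addresses.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts and unreachable networks all
        # surface as OSError (or a subclass of it).
        return False


def check_orports(endpoints, timeout: float = 5.0) -> dict:
    """Map each (host, port) pair to its TCP reachability (hypothetical helper)."""
    return {
        (host, port): tcp_reachable(host, port, timeout)
        for host, port in endpoints
    }
```

Run from a machine outside the hoster's network, a call such as `check_orports([("185.17.0.40", 443), ("2a0e:d606:0:99::2", 443)])` would report each address separately, which helps spot a case where only one address family is filtered.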

Here is a copy of the logs:

Jun 03 16:51:01.000 [notice] Tor 0.4.7.13 opening log file.

Jun 03 16:51:01.757 [notice] We compiled with OpenSSL 101010ef: OpenSSL 1.1.1n 15 Mar 2022 and we are running with OpenSSL 101010ef: 1.1.1n. These two versions should be binary compatible.

Jun 03 16:51:01.758 [notice] Tor 0.4.7.13 running on Linux with Libevent 2.1.12-stable, OpenSSL 1.1.1n, Zlib 1.2.11, Liblzma 5.2.5, Libzstd 1.4.8 and Glibc 2.31 as libc.

Jun 03 16:51:01.759 [notice] Tor can't help you if you use it wrong! Learn how to be safe at https://support.torproject.org/faq/staying-anonymous/

Jun 03 16:51:01.759 [notice] Read configuration file "/usr/share/tor/tor-service-defaults-torrc".

Jun 03 16:51:01.759 [notice] Read configuration file "/etc/tor/torrc".

Jun 03 16:51:01.760 [warn] Configuration port ORPort 443 superseded by ORPort [2a0e:d606:0:99::2]:443

Jun 03 16:51:01.760 [notice] Based on detected system memory, MaxMemInQueues is set to 1478 MB. You can override this by setting MaxMemInQueues by hand.

Jun 03 16:51:01.760 [notice] By default, Tor does not run as an exit relay. If you want to be an exit relay, set ExitRelay to 1. To suppress this message in the future, set ExitRelay to 0.

Jun 03 16:51:01.761 [warn] Configuration port ORPort 443 superseded by ORPort [2a0e:d606:0:99::2]:443

Jun 03 16:51:01.762 [notice] Opening Socks listener on 127.0.0.1:9050

Jun 03 16:51:01.762 [notice] Opened Socks listener connection (ready) on 127.0.0.1:9050

Jun 03 16:51:01.762 [notice] Opening OR listener on 0.0.0.0:443

Jun 03 16:51:01.762 [notice] Opened OR listener connection (ready) on 0.0.0.0:443

Jun 03 16:51:01.762 [notice] Opening OR listener on [2a0e:d606:0:99::2]:443

Jun 03 16:51:01.762 [notice] Opened OR listener connection (ready) on [2a0e:d606:0:99::2]:443

Jun 03 16:51:02.000 [notice] Parsing GEOIP IPv4 file /usr/share/tor/geoip.

Jun 03 16:51:02.000 [notice] Parsing GEOIP IPv6 file /usr/share/tor/geoip6.

Jun 03 16:51:02.000 [notice] Configured to measure statistics. Look for the *-stats files that will first be written to the data directory in 24 hours from now.

Jun 03 16:51:02.000 [notice] Your Tor server's identity key fingerprint is 'cozybeardev 23DE335EC403E4BE64AEBCE2029AD8C8C94EF4E5'

Jun 03 16:51:02.000 [notice] Your Tor server's identity key ed25519 fingerprint is 'cozybeardev oh4T8V98baEwx/8TQdmZhiBopHd9kPujOcJO0Atv2og'

Jun 03 16:51:02.000 [notice] Bootstrapped 0% (starting): Starting

Jun 03 16:51:03.000 [notice] Starting with guard context "default"

Jun 03 16:51:10.000 [notice] Signaled readiness to systemd

Jun 03 16:51:11.000 [notice] Bootstrapped 5% (conn): Connecting to a relay

Jun 03 16:51:11.000 [notice] Opening Socks listener on /run/tor/socks

Jun 03 16:51:11.000 [notice] Opened Socks listener connection (ready) on /run/tor/socks

Jun 03 16:51:11.000 [notice] Opening Control listener on /run/tor/control

Jun 03 16:51:11.000 [notice] Opened Control listener connection (ready) on /run/tor/control

Jun 03 16:51:11.000 [notice] Bootstrapped 10% (conn_done): Connected to a relay

Jun 03 16:51:11.000 [notice] Bootstrapped 14% (handshake): Handshaking with a relay

Jun 03 16:51:11.000 [notice] Bootstrapped 15% (handshake_done): Handshake with a relay done

Jun 03 16:51:11.000 [notice] Bootstrapped 75% (enough_dirinfo): Loaded enough directory info to build circuits

Jun 03 16:51:11.000 [notice] Bootstrapped 90% (ap_handshake_done): Handshake finished with a relay to build circuits

Jun 03 16:51:11.000 [notice] Bootstrapped 95% (circuit_create): Establishing a Tor circuit

Jun 03 16:51:11.000 [notice] Bootstrapped 100% (done): Done

Jun 03 16:51:11.000 [notice] Now checking whether IPv4 ORPort 185.17.0.40:443 is reachable... (this may take up to 20 minutes -- look for log messages indicating success)

Jun 03 16:51:11.000 [notice] Now checking whether IPv6 ORPort [2a0e:d606:0:99::2]:443 is reachable... (this may take up to 20 minutes -- look for log messages indicating success)

Jun 03 16:51:12.000 [notice] Self-testing indicates your ORPort [2a0e:d606:0:99::2]:443 is reachable from the outside. Excellent.

Jun 03 16:51:26.000 [notice] New control connection opened.

Jun 03 16:51:27.000 [notice] Self-testing indicates your ORPort 185.17.0.40:443 is reachable from the outside. Excellent. Publishing server descriptor.

Jun 03 16:58:13.000 [notice] Performing bandwidth self-test...done.

Jun 03 18:29:33.000 [notice] New control connection opened.

Jun 03 19:06:02.000 [notice] New control connection opened.

Jun 03 22:51:11.000 [notice] Heartbeat: It seems like we are not in the cached consensus.

Jun 03 22:51:11.000 [notice] Heartbeat: Tor's uptime is 6:00 hours, with 11 circuits open. I've sent 176.67 MB and received 170.66 MB. I've received 1593 connections on IPv4 and 209 on IPv6. I've made 894 connections with IPv4 and 185 with IPv6.

Jun 03 22:51:11.000 [notice] While not bootstrapping, fetched this many bytes: 4943895 (server descriptor fetch); 1905 (server descriptor upload); 375684 (consensus network-status fetch); 37417 (microdescriptor fetch)

Jun 03 22:51:11.000 [notice] Circuit handshake stats since last time: 0/0 TAP, 424/424 NTor.

Jun 03 22:51:11.000 [notice] Since startup we initiated 0 and received 0 v1 connections; initiated 0 and received 0 v2 connections; initiated 0 and received 0 v3 connections; initiated 0 and received 318 v4 connections; initiated 684 and received 506 v5 connections.

Jun 03 22:51:11.000 [notice] Heartbeat: DoS mitigation since startup: 0 circuits killed with too many cells, 0 circuits rejected, 0 marked addresses, 0 marked addresses for max queue, 0 same address concurrent connections rejected, 0 connections rejected, 0 single hop clients refused, 0 INTRODUCE2 rejected.
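
Since the log is long, a small script can pull out the lines that matter for this problem: warnings, the ORPort self-test results, the descriptor publication, and the heartbeat note about the consensus. This is only a sketch. The name `summarize_log` is made up for illustration, and the patterns are matched against the message wording seen in the log above, which may differ in other Tor versions.

```python
import re

# Matches lines such as:
# "Jun 03 16:51:01.760 [warn] Configuration port ORPort 443 superseded by ..."
LOG_LINE = re.compile(
    r"^(?P<ts>\w{3} \d{2} \d{2}:\d{2}:\d{2}\.\d{3}) \[(?P<level>\w+)\] (?P<msg>.*)$"
)
REACHABLE = re.compile(r"your ORPort (\S+) is reachable from the outside")


def summarize_log(lines):
    """Collect the relay-health signals from Tor notice-log lines."""
    summary = {
        "warnings": [],               # unique [warn]/[err] messages
        "reachable_orports": [],      # addresses that passed the self-test
        "descriptor_published": False,
        "not_in_consensus": False,    # heartbeat said we are not in the consensus
    }
    for raw in lines:
        match = LOG_LINE.match(raw.strip())
        if not match:
            continue  # blank lines or anything that is not a log entry
        level, msg = match["level"], match["msg"]
        if level in ("warn", "err") and msg not in summary["warnings"]:
            summary["warnings"].append(msg)
        reach = REACHABLE.search(msg)
        if reach:
            summary["reachable_orports"].append(reach.group(1))
        if "Publishing server descriptor" in msg:
            summary["descriptor_published"] = True
        if "not in the cached consensus" in msg:
            summary["not_in_consensus"] = True
    return summary
```

Feeding it the file contents, for example `summarize_log(open(path).read().splitlines())` with `path` pointing at the notice log, gives a compact summary to compare against the other relays that are working.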

The relay in question may be found here:

https://metrics.torproject.org/rs.html#details/23DE335EC403E4BE64AEBCE2029AD8C8C94EF4E5

The consensus health entry is here:

https://consensus-health.torproject.org/consensus-health-2023-06-04-06-00.html#23DE335EC403E4BE64AEBCE2029AD8C8C94EF4E5
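
The same status can also be polled from a script instead of reloading the metrics page. The sketch below assumes the Onionoo details endpoint and the field names `relays`, `running`, `flags` and `last_seen` as I understand them from the Onionoo protocol, so please check them against the current documentation. `fetch_details` and `relay_status` are illustrative names, not part of any Tor tool.

```python
import json
import urllib.request

# Assumed Onionoo details endpoint; verify against the Onionoo docs.
ONIONOO_DETAILS = "https://onionoo.torproject.org/details?lookup={fingerprint}"


def fetch_details(fingerprint: str, timeout: float = 10.0) -> dict:
    """Download the Onionoo details document for one fingerprint."""
    url = ONIONOO_DETAILS.format(fingerprint=fingerprint)
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def relay_status(details: dict, fingerprint: str):
    """Return the running state, flags and last_seen for a relay, or None."""
    for relay in details.get("relays", []):
        if relay.get("fingerprint", "").upper() == fingerprint.upper():
            return {
                "nickname": relay.get("nickname"),
                "running": relay.get("running"),
                "flags": relay.get("flags", []),
                "last_seen": relay.get("last_seen"),
            }
    # The fingerprint is not in the document at all.
    return None
```

Calling `relay_status(fetch_details("23DE335EC403E4BE64AEBCE2029AD8C8C94EF4E5"), "23DE335EC403E4BE64AEBCE2029AD8C8C94EF4E5")` periodically would show when, or whether, the relay comes back as running.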

Does anybody have any tips on where to go from here? I sure don’t…