I want to share some progress on PT experiments in Shadow.

Updates to Shadow

The Shadow team implemented support for the exec and fork system calls, which means that pluggable transports can be run in the usual way: by including ClientTransportPlugin and ServerTransportPlugin lines that use the exec option in the client and server torrc files.
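For reference, the exec form names the transport and the binary that tor should launch as a managed proxy. The helper below only formats those torrc lines; the helper name and the binary paths are hypothetical placeholders, while ClientTransportPlugin, ServerTransportPlugin, and exec are tor's own configuration keywords.

```python
def transport_plugin_line(role: str, transport: str, binary: str, *args: str) -> str:
    """Format a torrc line that makes tor launch a PT binary itself (managed proxy).

    role is "client" or "server"; binary and args are illustrative placeholders.
    """
    directives = {"client": "ClientTransportPlugin", "server": "ServerTransportPlugin"}
    if role not in directives:
        raise ValueError(f"unknown role: {role!r}")
    # "exec" tells tor to start the binary and speak the PT protocol with it.
    return " ".join([directives[role], transport, "exec", binary, *args])


# Hypothetical paths; in a Shadow experiment they would point at the compiled PT binaries.
print(transport_plugin_line("client", "snowflake", "/opt/pt/snowflake-client"))
# ClientTransportPlugin snowflake exec /opt/pt/snowflake-client
print(transport_plugin_line("server", "snowflake", "/opt/pt/snowflake-server"))
# ServerTransportPlugin snowflake exec /opt/pt/snowflake-server
```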

ptnettools

I’ve been working on a utility, called ptnettools, that’s designed to be used with tornettools to set up Shadow experiments that simulate Tor Pluggable Transports alongside a sampled Tor network.

It currently supports three different transports: obfs4, webtunnel, and snowflake. The script adds a PT bridge and any necessary additional infrastructure, and turns every performance client into a PT performance client configured to use that single bridge. For experiments generated by tornettools, this defaults to 100 PT clients.
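A minimal sketch of the client-side conversion, assuming each performance client's torrc is available as text. This is not ptnettools' actual code: the helper, the client names, the bridge address (from the 192.0.2.0/24 documentation range), and the binary path are hypothetical. UseBridges, Bridge, and ClientTransportPlugin are standard torrc options.

```python
def to_pt_clients(client_torrcs: dict[str, str], transport: str, bridge_addr: str,
                  client_binary: str, bridge_extra: str = "") -> dict[str, str]:
    """Return new torrc texts in which every client uses the same single PT bridge."""
    extra = [
        "UseBridges 1",
        # One shared bridge for all clients; bridge_extra can hold a fingerprint or k=v args.
        f"Bridge {transport} {bridge_addr} {bridge_extra}".rstrip(),
        f"ClientTransportPlugin {transport} exec {client_binary}",
    ]
    converted = {}
    for name, torrc in client_torrcs.items():
        body = torrc.rstrip("\n")
        lines = [body] if body else []
        converted[name] = "\n".join(lines + extra) + "\n"
    return converted


# Hypothetical client names and paths.
clients = {f"perfclient{i}": "SocksPort 9000\n" for i in range(1, 101)}
pt = to_pt_clients(clients, "obfs4", "192.0.2.10:443", "/opt/pt/lyrebird")
print(len(pt))  # 100
```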

Snowflake experimental results

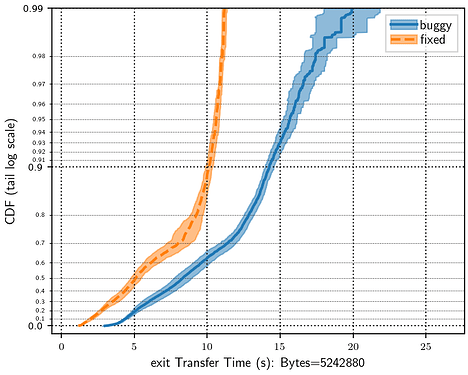

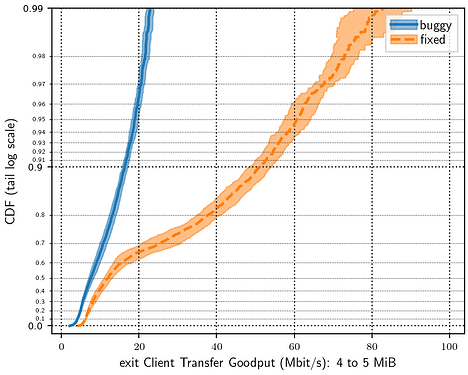

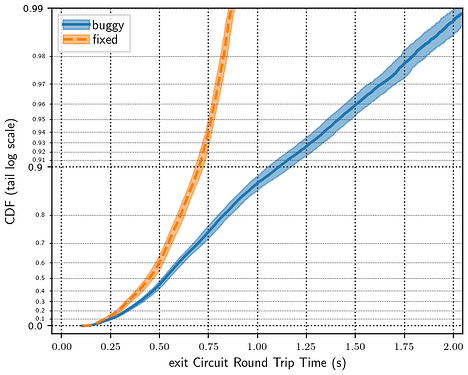

The Snowflake experiments generated by ptnettools run the broker, proxies, bridge, STUN server, and probetest server all on the same network model node. There are 25 proxies in the simulation, so with the default 100 PT clients each proxy handles an average of 4 clients. This does not match the real Snowflake deployment, but I wanted to run a simple simulation to validate that Snowflake runs correctly in Shadow. As a test case, I chose the TLS traffic mixing bug and compared a Snowflake build containing it against the most recent version of Snowflake, to see whether Shadow simulations would show a significant performance impact. Each test case was run with 10 different 10% samples of the Tor network.
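The experiment design in this paragraph reduces to a little arithmetic: 100 clients spread over 25 proxies, and two Snowflake versions crossed with ten network samples. The constants come from the text; the directory names mirror the tornet-0.1-$i naming used later in this post.

```python
from itertools import product

NUM_PT_CLIENTS = 100  # tornettools default number of performance clients
NUM_PROXIES = 25      # standalone Snowflake proxies added by ptnettools
VERSIONS = ("buggy", "fixed")
SAMPLES = range(10)   # ten independent 10% samples of the Tor network

avg_clients_per_proxy = NUM_PT_CLIENTS / NUM_PROXIES
# One simulation per (version, sample) pair.
runs = [f"{version}/tornet-0.1-{i}" for version, i in product(VERSIONS, SAMPLES)]
print(avg_clients_per_proxy, len(runs))  # 4.0 20
```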

The results were pretty much what I’d expect, though I’m surprised by how visible the bug is with only 100 simultaneous Snowflake users. Snowflake had many more users when the bug was introduced, which suggests that the real-world performance impact was much greater than what is shown here. Experiments with the bug showed significantly longer transfer times and lower client goodput than experiments with the current version of Snowflake. These results are encouraging: they suggest that Snowflake runs well in Shadow and that we can use Shadow to test changes to Snowflake that affect network performance.

Steps to reproduce

Unfortunately, simulating 10% of the Tor network requires a significant amount of RAM, and each simulation can take several days, depending on the amount of parallelism the host machine allows. Take special care to ensure that system limits are configured correctly for large experiments. Each of these simulations ran on a RIPPLE machine with 36 cores and took 13 hours to complete. I don’t know the minimum requirements for a 10% Tor network, but given how long these experiments took, it would be very difficult to run them on a laptop that is also used day to day.
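Before launching a long run, it can help to check the limits that large Shadow experiments tend to hit. The sketch below reads the open-file limit and a few Linux sysctls using Python's standard resource module and /proc/sys. Which limits matter, and the threshold values in the usage line, are assumptions to adapt from Shadow's own system-configuration guidance, not recommendations.

```python
import resource
from pathlib import Path

# Kernel settings assumed to matter for large runs; adjust to Shadow's documentation.
SYSCTL_PATHS = {
    "vm.max_map_count": Path("/proc/sys/vm/max_map_count"),
    "kernel.pid_max": Path("/proc/sys/kernel/pid_max"),
    "kernel.threads-max": Path("/proc/sys/kernel/threads-max"),
}


def _rlimit(value):
    # getrlimit reports "unlimited" as resource.RLIM_INFINITY; map it to infinity.
    return float("inf") if value == resource.RLIM_INFINITY else value


def current_limits() -> dict:
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    limits = {"nofile_soft": _rlimit(soft), "nofile_hard": _rlimit(hard)}
    for name, path in SYSCTL_PATHS.items():
        # /proc/sys only exists on Linux; record None elsewhere.
        limits[name] = int(path.read_text().strip()) if path.exists() else None
    return limits


def below_minimum(limits: dict, minimums: dict) -> dict:
    """Return {name: (current, required)} for every known limit below its minimum."""
    return {
        name: (limits[name], required)
        for name, required in minimums.items()
        if limits.get(name) is not None and limits[name] < required
    }


# Placeholder thresholds for illustration only.
print(below_minimum(current_limits(), {"nofile_soft": 1_000_000, "vm.max_map_count": 1_000_000}))
```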

The first step is to install and set up tornettools, and all related binaries and utilities. After that, the Snowflake experiments can be set up as follows:

- Build the buggy and current versions of Snowflake. The buggy version requires several patches to run in Shadow; I pushed a branch with all of the required patches applied. The current version of Snowflake requires a single patch to work in Shadow, so make sure to apply it before compiling. The experiments I ran used commit 96a02f80 with the patch applied.

  The ptnettools script expects all Snowflake binaries to be in a single folder. See the Snowflake instructions for ptnettools for more information on how to prepare these binaries. I suggest the following layout:

  ```
  ~/.local/bin/snowflake/
  ├── buggy
  │   ├── broker
  │   ├── client
  │   ├── probetest
  │   ├── proxy
  │   ├── server
  │   └── stund
  └── fixed
      ├── broker
      ├── client
      ├── probetest
      ├── proxy
      ├── server
      └── stund
  ```

- Stage the network model and process CollecTor data

  Follow the tornettools instructions for downloading CollecTor and metrics data, downloading the network model, compiling tor, and staging everything for experiment generation.

- Generate 10% Tor network experiments, copy them for each test case, and modify them with ptnettools

  ```bash
  mkdir -p buggy fixed
  for i in $(seq 0 9); do
      tornettools generate \
          relayinfo_staging_2023-04-01--2023-04-30.json \
          userinfo_staging_2023-04-01--2023-04-30.json \
          networkinfo_staging.gml \
          tmodel-ccs2018.github.io \
          --network_scale 0.1 \
          --prefix tornet-0.1-$i
      cp -r tornet-0.1-$i buggy/
      cp -r tornet-0.1-$i fixed/
      ./ptnettools.py --path buggy/tornet-0.1-$i --transport snowflake --transport-bin-path ~/.local/bin/snowflake/buggy
      ./ptnettools.py --path fixed/tornet-0.1-$i --transport snowflake --transport-bin-path ~/.local/bin/snowflake/fixed
  done
  ```

- Simulate each experiment

  ```bash
  PARALLELISM=36  # number of cores available to each simulation (36 in the runs above)
  for i in $(seq 0 9); do
      for version in buggy fixed; do
          tornettools simulate \
              -a "--parallelism=$PARALLELISM --seed=666 --template-directory=shadow.data.template --model-unblocked-syscall-latency=true" \
              $version/tornet-0.1-$i
      done
  done
  ```

- Parse and plot the data

  ```bash
  for i in $(seq 0 9); do
      tornettools parse buggy/tornet-0.1-$i
      tornettools parse fixed/tornet-0.1-$i
  done
  tornettools plot "buggy" "fixed"
  ```
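Since ptnettools expects all binaries for a given Snowflake version in one folder, a quick pre-flight check can catch a missing or non-executable binary before starting a simulation that runs for many hours. A minimal sketch, assuming the suggested ~/.local/bin/snowflake layout; the helper name is hypothetical and not part of ptnettools.

```python
import os
from pathlib import Path

# Binary names from the suggested layout.
SNOWFLAKE_BINARIES = ("broker", "client", "probetest", "proxy", "server", "stund")


def missing_binaries(root: Path, versions=("buggy", "fixed")) -> list[Path]:
    """List expected Snowflake binaries that are absent or not executable."""
    problems = []
    for version in versions:
        for name in SNOWFLAKE_BINARIES:
            path = root / version / name
            if not (path.is_file() and os.access(path, os.X_OK)):
                problems.append(path)
    return problems


if __name__ == "__main__":
    for path in missing_binaries(Path.home() / ".local/bin/snowflake"):
        print(f"missing or not executable: {path}")
```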

Next steps

This was a good validation check that things are running correctly, but much of what was outlined as future work in my previous post is still left to do.

In particular:

Modelling instability in transnational internet links

I wrote a quick script for updating a network model to introduce a 10% packet loss rate for all unidirectional links from outside China to inside China, as suggested in Characterizing Transnational Internet Performance and the Great Bottleneck of China.
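The script itself isn't shown here. As a minimal sketch of the idea, the function below works on a plain dictionary view of a directed graph. Shadow's GML edges accept a packet_loss attribute (a fraction between 0 and 1), and one-way loss needs a directed graph, since an undirected edge applies in both directions. The node-to-country mapping and the helper name are assumptions; how country information is stored depends on the network model.

```python
def add_ingress_loss(node_country, edges, target="CN", loss=0.10):
    """Set packet loss on every directed edge entering `target` from outside it.

    node_country: node id -> country code (nodes without a code count as outside)
    edges: (src, dst) -> dict of edge attributes, modified in place
    Returns the number of edges modified.
    """
    modified = 0
    for (src, dst), attrs in edges.items():
        if node_country.get(src) != target and node_country.get(dst) == target:
            attrs["packet_loss"] = loss  # overwrites any existing loss on this edge
            modified += 1
    return modified


countries = {0: "US", 1: "CN", 2: "DE"}
edges = {(0, 1): {}, (1, 0): {}, (2, 1): {}, (1, 1): {}, (0, 2): {}}
print(add_ingress_loss(countries, edges))  # 2
print(edges[(1, 0)])  # {}
```

Self-loops inside China and links leaving China are left untouched, which matches the one-way loss described above.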

My plan is to run some Snowflake performance experiments with all performance PT clients outside of China vs. inside of China, and compare the results to the findings in https://gitlab.torproject.org/tpo/anti-censorship/pluggable-transports/snowflake/-/issues/40251. If the results match what we expect, then Shadow could be a useful tool for analyzing the proposed switch to unreliable channels.
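One way to set up the two variants is to place every PT client on a network node inside China, or every client on a node outside it. A minimal sketch under the same assumed node-to-country mapping; the helper is hypothetical, and sampling with replacement means several clients can share a node.

```python
import random


def place_clients(node_country, n_clients, inside, target="CN", seed=1):
    """Pick a network node for each PT client, all inside or all outside `target`."""
    candidates = sorted(
        node for node, cc in node_country.items() if (cc == target) == inside
    )
    if not candidates:
        raise ValueError("no candidate nodes for this placement")
    rng = random.Random(seed)  # fixed seed so both variants are reproducible
    return [rng.choice(candidates) for _ in range(n_clients)]


countries = {0: "US", 1: "CN", 2: "DE", 3: "CN"}
inside = place_clients(countries, 100, inside=True)
outside = place_clients(countries, 100, inside=False)
print(set(inside) <= {1, 3}, set(outside) <= {0, 2})  # True True
```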

Sampling the Snowflake network

The Snowflake experiments generated by ptnettools run only 25 Snowflake proxies and do not sample the Snowflake network. The next step there is to sample from Snowflake statistics to locate Snowflake proxies in the network graph. Because web-based Snowflake proxies would be difficult to simulate in Shadow, all simulated proxies will be standalone proxies, so this sampling will be imperfect.
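A minimal sketch of the sampling step, assuming per-country proxy counts have already been extracted from Snowflake statistics and that graph nodes carry a country code. The helper and the counts in the usage lines are made up for illustration, not real statistics. Countries absent from the graph are simply dropped, which is one of several reasonable choices.

```python
import random
from collections import Counter


def sample_proxy_nodes(proxy_counts, node_country, n_proxies, seed=1):
    """Place n_proxies standalone proxies, choosing countries in proportion to proxy_counts.

    proxy_counts: country code -> number of proxies observed
    node_country: network-graph node id -> country code
    """
    rng = random.Random(seed)
    nodes_by_country = {}
    for node, cc in node_country.items():
        nodes_by_country.setdefault(cc, []).append(node)
    # Only countries present in the network graph can host a proxy.
    countries = sorted(cc for cc in proxy_counts if cc in nodes_by_country)
    if not countries:
        raise ValueError("no overlap between proxy statistics and graph countries")
    weights = [proxy_counts[cc] for cc in countries]
    chosen = rng.choices(countries, weights=weights, k=n_proxies)
    # Within a country, pick a graph node uniformly at random.
    return [rng.choice(nodes_by_country[cc]) for cc in chosen]


counts = {"US": 30, "DE": 20, "XX": 5}  # made-up counts for illustration
graph = {0: "US", 1: "US", 2: "DE"}
nodes = sample_proxy_nodes(counts, graph, 25)
print(len(nodes), Counter(graph[n] for n in nodes))
```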